The Covid-19 Effect on Virtual Consults

With the global impact of Covid-19 and local curfew measures, many medical specialists, including plastic and cosmetic surgeons, have made increased use of virtual consultations. While practices were closed, plastic surgeons stayed in touch with patients via virtual visits.

In 2020, the ASPS surveyed its members and found that:

- 64% had already seen an increase in virtual consultations even before the beginning of Covid-19

- 68% started virtual consultations because of Covid-19

Using Deep Learning to detect Liposuction Areas

We trained a deep learning model to recognize potential liposuction areas in patient photos. The model has been incorporated into the new version of our Virtual Consultation to educate patients on which areas they could have liposuction done. Instead of having patients choose problem areas from a simple list of checkboxes or by clicking on a stock image of a human model, we use AI to show them their actual body areas and mark those areas where they might have lipo.

The Motivation

Our motivation was to improve the current version of our online virtual consultation. The current BBL virtual consultation consists of a basic contact info form and an option to upload photos or take fresh pictures directly with the smartphone camera. In one of the steps, we ask patients to select from a list the body areas they are interested in having treated.

We felt that we could go further and use the power of deep learning image recognition to give patients added value that other virtual consultations have not offered.

The Process

Our work relies on the libraries provided by Facebook’s object detection platform, Detectron2. We used its libraries and base object detection models as our starting point.

Image 1: Detectron2, a Facebook computer vision library used for object and segment detection

We trained our own Detectron2 model using over 2500 before-and-after and virtual consultation pictures.

Gathering the Data

We used before and after pictures from our doctors, and pictures collected from virtual consultations. In total, we had over 10,000 images to start with.

To make our work easier, we had to organize the images into 3 main views: Front, Side and Back. Since manually organizing over 10,000 images would take many days, we created a separate AI view detection model to organize images according to the position of the person in the picture. We trained the view detection model with over 450 pictures, taking 150 front, 150 back and 150 side views. We then let the view detection model sort the 10,000 images into 1 of the 3 view types.
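The sorting step described above can be sketched roughly as follows. This is a minimal illustration, not our production code: `sort_by_view` and the `predict_view` callable are hypothetical names, and `predict_view` stands in for the trained view detection model, assumed here to return one of `"front"`, `"side"` or `"back"` for an image path.

```python
import shutil
from pathlib import Path
from typing import Callable, Dict

VIEWS = ("front", "side", "back")
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def sort_by_view(
    src_dir: Path,
    dst_dir: Path,
    predict_view: Callable[[Path], str],  # hypothetical wrapper around the view model
) -> Dict[str, int]:
    """Copy every image in src_dir into dst_dir/<view>/ and return per-view counts."""
    counts = {view: 0 for view in VIEWS}
    for view in VIEWS:
        (dst_dir / view).mkdir(parents=True, exist_ok=True)

    for image_path in sorted(src_dir.iterdir()):
        # Skip anything that is not an image file.
        if image_path.suffix.lower() not in IMAGE_SUFFIXES:
            continue
        view = predict_view(image_path)
        if view not in counts:
            raise ValueError(f"unexpected view {view!r} for {image_path.name}")
        # Copy rather than move, so the original collection stays untouched.
        shutil.copy2(image_path, dst_dir / view / image_path.name)
        counts[view] += 1
    return counts
```

Copying instead of moving keeps the unsorted originals intact, which makes it easy to re-run the sort after retraining the view model.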

Labeling the Data

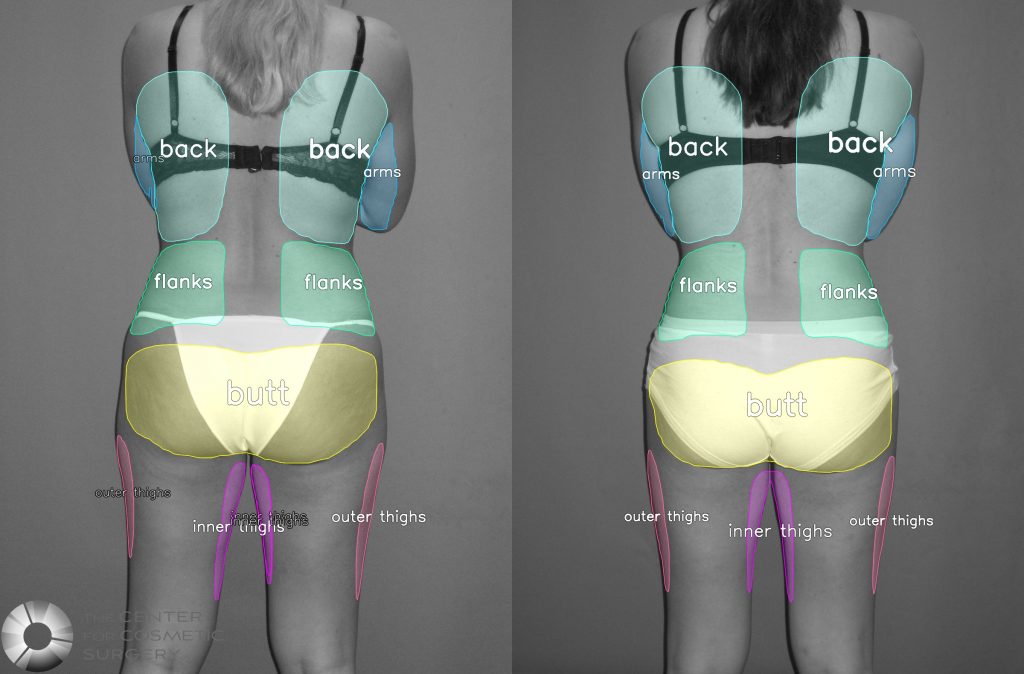

Once all images were organized into the 3 views (Front, Side and Back), we selected a few hundred to label the body areas.

In sum, the main training data for our body areas detection model was a few hundred pictures plus a JSON file for each picture containing the 2D polygon coordinates of the drawn body areas.

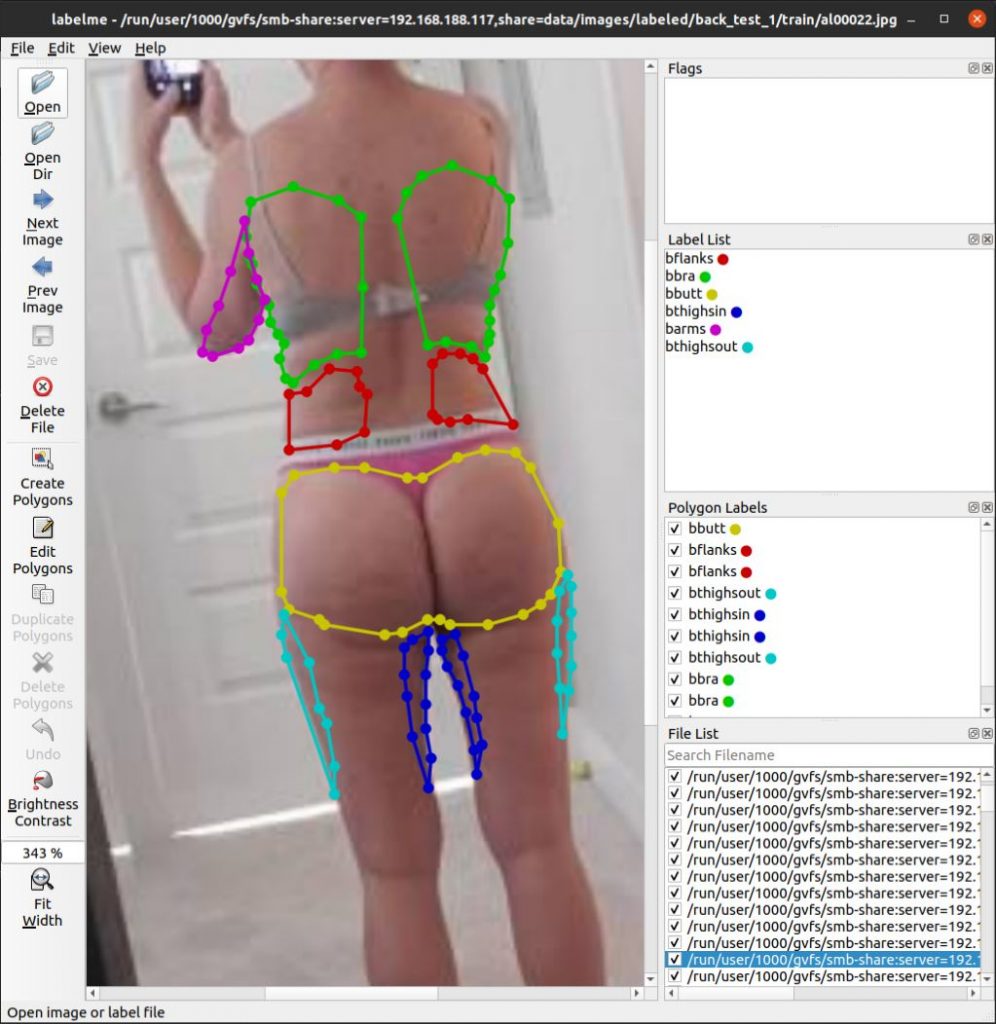

Image 2: We used the annotation tool Labelme to draw polygons over each body area we want to detect later. The back area is shown in green, flanks in red, butt in yellow, outer thighs in cyan, inner thighs in blue and upper arms in magenta.
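To give an idea of what each picture + JSON pair looks like to the training code, here is a small sketch that turns one Labelme annotation into a Detectron2-style dataset record. It is an illustration under assumptions: the function name `labelme_to_record` and the label strings in `CLASS_NAMES` are hypothetical (the real strings are whatever was typed into Labelme). Detectron2 additionally expects a `bbox_mode` field on every annotation (`BoxMode.XYXY_ABS` for the boxes below); it is left out so the sketch has no dependencies.

```python
import json
from pathlib import Path
from typing import Any, Dict

# Assumed label strings for the six body areas; adjust to match the Labelme files.
CLASS_NAMES = ["back", "flanks", "butt", "outer_thighs", "inner_thighs", "upper_arms"]


def labelme_to_record(labelme: Dict[str, Any], image_id: int) -> Dict[str, Any]:
    """Convert one parsed Labelme JSON document into a dataset record."""
    annotations = []
    for shape in labelme["shapes"]:
        # Only polygon shapes describe body areas; skip anything else.
        if shape.get("shape_type", "polygon") != "polygon":
            continue
        points = shape["points"]  # [[x1, y1], [x2, y2], ...]
        xs = [x for x, _ in points]
        ys = [y for _, y in points]
        annotations.append({
            "category_id": CLASS_NAMES.index(shape["label"]),
            # Bounding box in absolute (x_min, y_min, x_max, y_max) form.
            "bbox": [min(xs), min(ys), max(xs), max(ys)],
            # One polygon, flattened to [x1, y1, x2, y2, ...].
            "segmentation": [[coord for point in points for coord in point]],
        })
    return {
        "file_name": labelme["imagePath"],
        "image_id": image_id,
        "height": labelme["imageHeight"],
        "width": labelme["imageWidth"],
        "annotations": annotations,
    }


def load_labelme_file(json_path: Path, image_id: int) -> Dict[str, Any]:
    """Read a Labelme JSON file from disk and convert it."""
    with open(json_path, encoding="utf-8") as f:
        return labelme_to_record(json.load(f), image_id)
```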

Labeling the data is an arduous manual process – it takes time, precision and patience to draw polygons using the mouse to mark the different body areas.

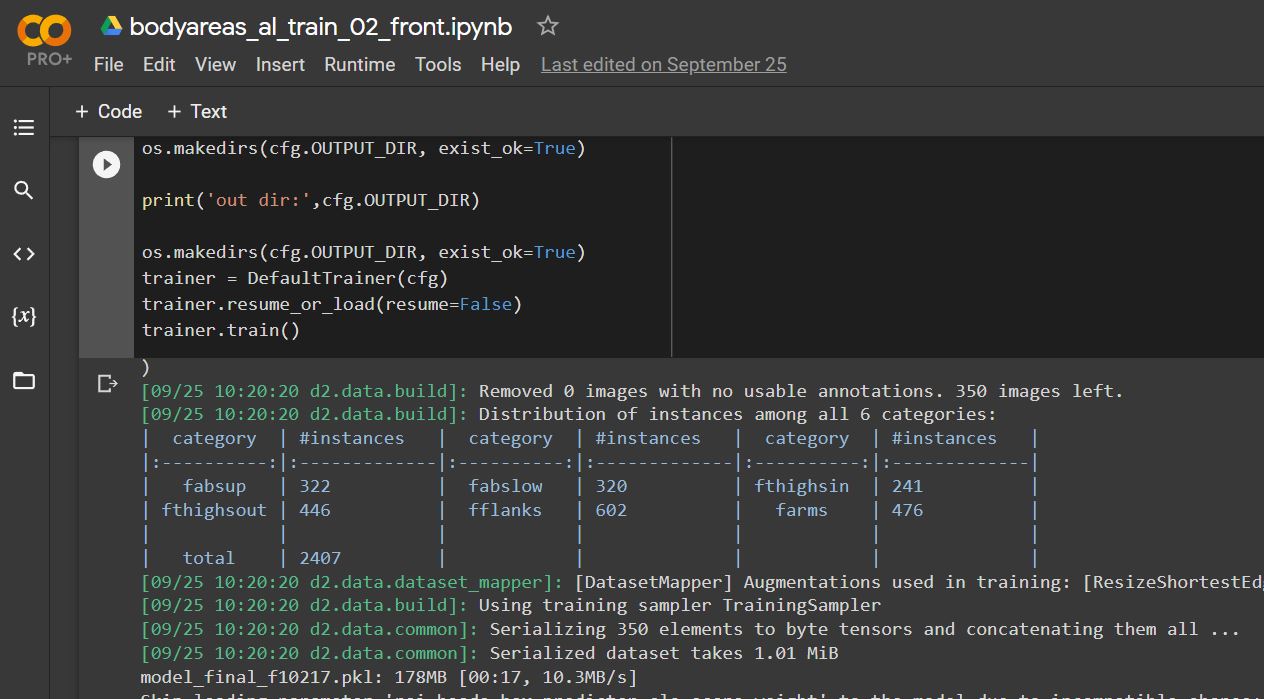

Training the Model

Once we had all 2500 picture + JSON pairs, we split them into 2 sets: a training set containing 2407 pairs and a validation set with the remaining 93 pairs, used during training.

Image 3: The training was done in Google Colab using a Tesla V100 GPU (16 GB RAM)

Training took about 1 hour in total, thanks to the GPU. The same training done offline without a GPU took over 10 hours. A powerful GPU is definitely a valuable asset for training models much faster.
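A split like this can be reproduced with a few lines of Python. The sketch below assumes a random split with a fixed seed; how the 93 validation pairs were actually picked is not specified here, and `split_pairs` is a hypothetical helper name.

```python
import random
from typing import List, Sequence, Tuple


def split_pairs(
    pairs: Sequence[str], n_val: int = 93, seed: int = 0
) -> Tuple[List[str], List[str]]:
    """Shuffle the pairs reproducibly and hold out n_val of them for validation."""
    if not 0 <= n_val <= len(pairs):
        raise ValueError("n_val must be between 0 and the number of pairs")
    shuffled = list(pairs)
    # A dedicated Random instance keeps the split stable across runs.
    random.Random(seed).shuffle(shuffled)
    return shuffled[n_val:], shuffled[:n_val]


# Example with placeholder identifiers for 2500 picture + JSON pairs.
pairs = [f"img_{i:04d}" for i in range(2500)]
train_pairs, val_pairs = split_pairs(pairs)
print(len(train_pairs), len(val_pairs))
```

Fixing the seed means the same 93 pairs end up in the validation set on every run, so results from different training runs stay comparable.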

Testing the Model

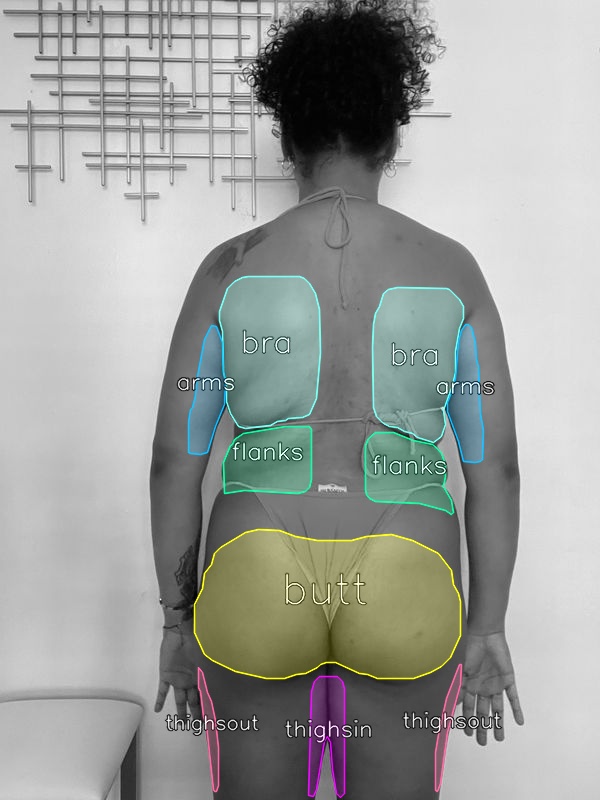

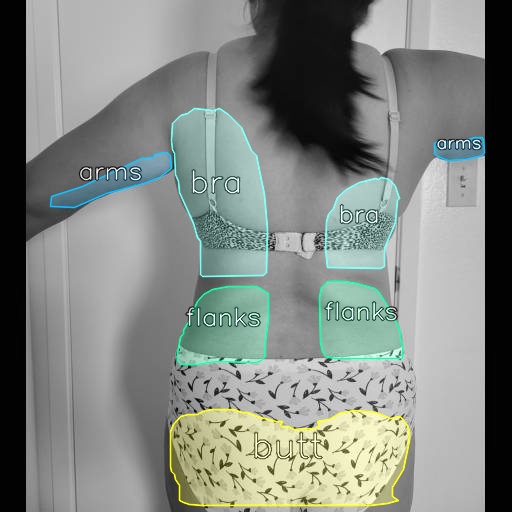

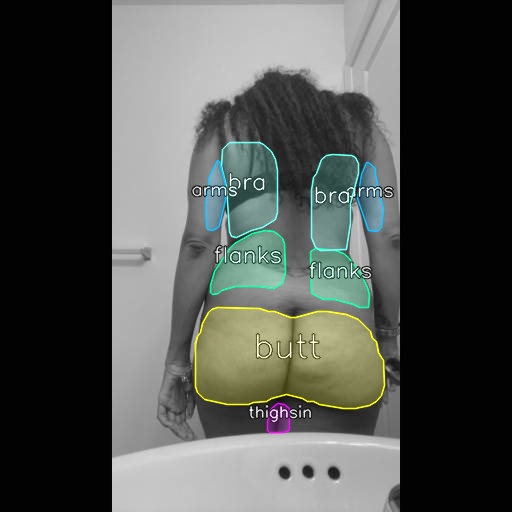

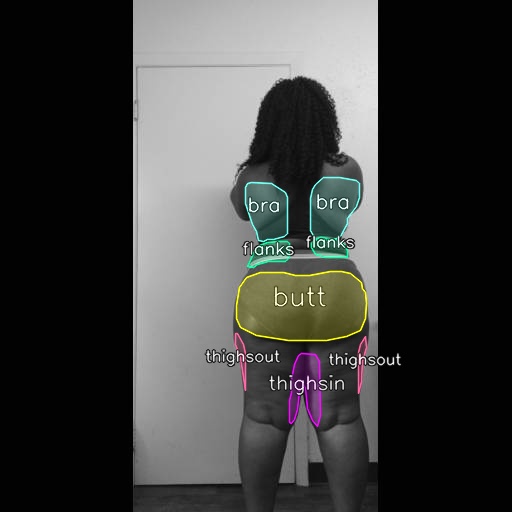

We tested the trained lipo areas detection model with several before and after pictures and virtual consultation selfies.

Testing Before and Afters with a plain background

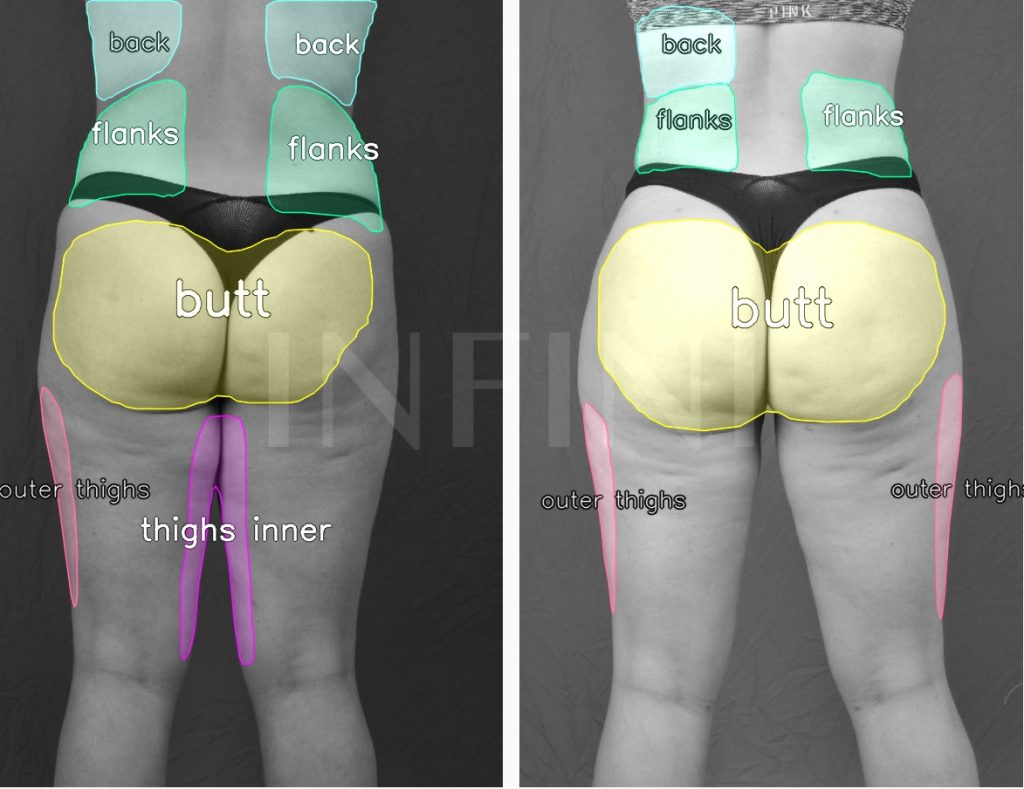

Testing Before and Afters with a plain background and a watermark (courtesy – Infiniskin.com)

Testing Virtual Consultation Selfies (smartphone pictures) with complex backgrounds

The model also proved effective on regular selfie-style pictures taken with an ordinary smartphone camera. Here are some of the results.